Facebook recently removed the page of the French town of Bitche from its service. The name Bitche is obviously very close to an English swear word, and a social media platform like Facebook is under pressure to remove offensive content. However, it’s just as obvious that a human reader would distinguish Bitche from the swear word and not make such a mistake. Of course, a human didn’t make this mistake. Facebook’s AI did.

This isn’t the end of the story, though. News outlets such as CNN not only picked up the story but also stoked outrage by accusing Facebook of “real censorship”.

This is a formula we see play out more and more often. AI removes something from social media that it should not have. An offended party cries censorship. Then the media cover the removal, giving time and attention to the outraged parties. The social media company eventually fixes the problem, but not before the pointless damage is done.

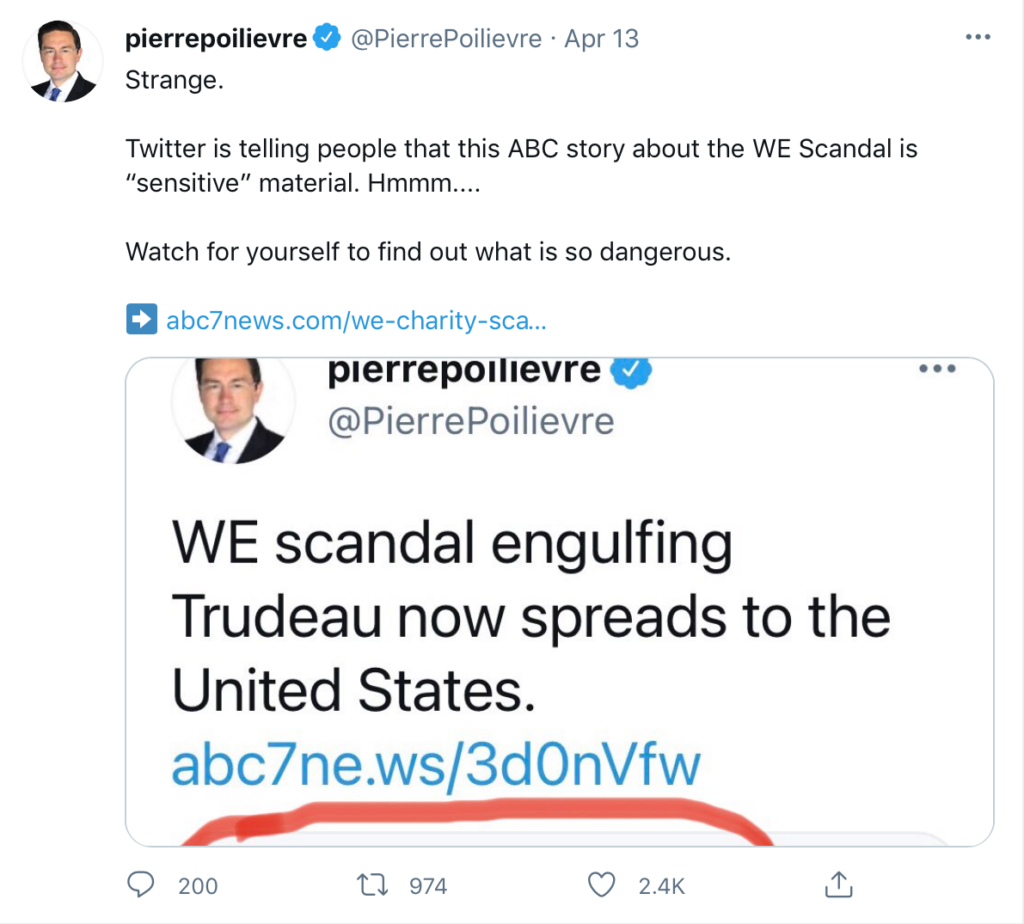

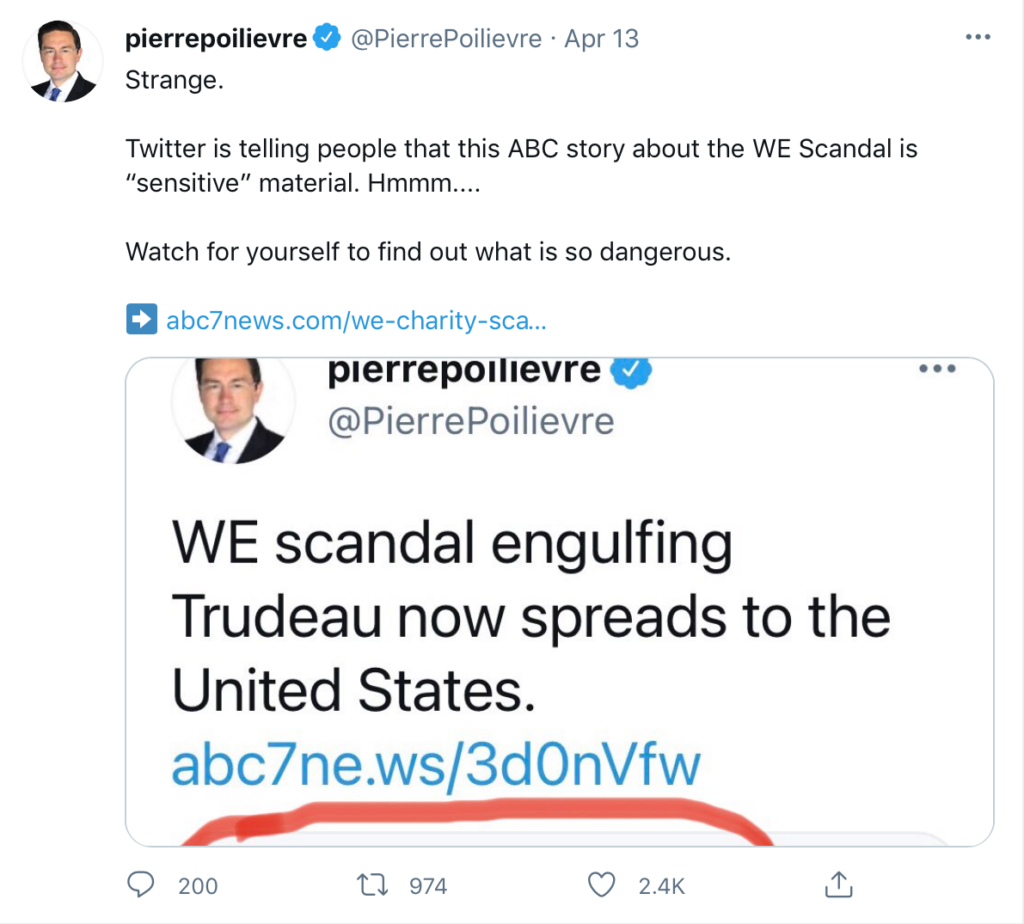

Here’s another, more obnoxious, version of this story. Pierre Poilievre, MP for Carleton and a man noted for once appearing in a series of YouTube videos so embarrassing that the Harper government scrubbed them from the Internet, shared a video on Twitter about the very real WE scandal. Twitter wrongly labelled the video as sensitive content, no doubt because words like “spreads” and “engulf” are associated with pandemic news. Poilievre then feigned outrage and set out to, ahem, engulf his followers in it.

AI does a dumb removal. People cynically use the removal to stoke outrage, pull eyeballs and drive a partisan agenda. What should we think about this?

Social media has an AI problem. We all know that AI makes dumb mistakes. Kuration once had a problem promoting a client’s article on Facebook because the article mentioned cryptocurrency. Facebook had a policy against promoting cryptocurrencies (due to all the ICO scandals), but the article wasn’t promoting anything of the kind. Rather, a small section of it explained a bit about how cryptocurrency works. That distinction didn’t matter to the AI.

Social media doesn’t have enough human supervision of AI. Know what’s more annoying than having your article flagged on Facebook for spurious reasons? Having your appeal rejected by some kind of chatbot. The message we received from Facebook about that cryptocurrency article was canned, sent either by an AI or by a human who didn’t have time to actually look into the issue.

AI can be gamed. Here’s how we solved our problem: we removed the word cryptocurrency from the article’s meta description. We changed nothing else, and Facebook approved it. Is it good that we got what we wanted? Yes. Is it bad that it’s so easy to game the AI, especially when the AI is supposed to be tamping down on social media toxicity, flagging fake news, and protecting elections? Also yes.
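Nobody outside Facebook knows exactly how its ad review works, and it is almost certainly more sophisticated than this. Still, a deliberately naive keyword check is a handy way to picture why a one-word edit could flip the result. This is a hypothetical sketch: `BANNED_TERMS`, `is_flagged` and the sample descriptions are made up, and the assumption that only the meta description is inspected comes from our experience, not from any documented Facebook behaviour.

```python
# Hypothetical keyword filter, NOT Facebook's actual system.
# Assumption: only the meta description is checked, as in our experience.
BANNED_TERMS = {"cryptocurrency"}


def is_flagged(meta_description: str) -> bool:
    """Return True if any banned term appears as a whole word in the text."""
    # Lowercase each word and strip common punctuation before comparing.
    words = {w.strip(".,;:!?\"'()").lower() for w in meta_description.split()}
    return not BANNED_TERMS.isdisjoint(words)


# Made-up example descriptions: the second simply drops the trigger word.
original = "A short guide to blockchain, cryptocurrency and your business."
edited = "A short guide to blockchain and your business."

print(is_flagged(original))  # True
print(is_flagged(edited))    # False
```

Because a rule like this matches surface words rather than meaning, it blocks a harmless explainer and waves through anything that avoids the word. A variant matching substrings instead of whole words would fail the other way, flagging innocent names like Bitche.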

Social media companies need AI. There is simply no way to police social media spaces without it: there’s far too much content for human eyeballs to sift through. Facebook generates 4 new petabytes of data per day. Users upload 95 million photos and videos to Instagram every day. Twitter users post roughly 350,000 tweets per minute. Social media companies have an impossible problem: they make money from user-generated content and engagement, but there is no way to police all that content and engagement without AI doing most of the heavy lifting.
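A quick back-of-envelope calculation shows the scale. Take the commonly cited figure of about 350,000 tweets per minute (roughly 500 million a day) and assume, generously, that a moderator needs only 10 seconds per tweet and works one 8-hour shift a day. The review time and shift length are assumptions for illustration, and `moderators_needed` is a made-up helper.

```python
# Back-of-envelope: full-time moderators needed to glance at every tweet once.
TWEETS_PER_MINUTE = 350_000  # commonly cited estimate
SECONDS_PER_REVIEW = 10      # assumption: a very quick look per tweet
SHIFT_HOURS = 8              # assumption: one 8-hour shift per moderator per day


def moderators_needed(items_per_minute: int, seconds_per_item: float,
                      shift_hours: float) -> float:
    """Moderators required per day to review every item once."""
    items_per_day = items_per_minute * 60 * 24         # minutes in a day
    review_seconds = items_per_day * seconds_per_item  # total review time
    return review_seconds / (shift_hours * 3600)       # seconds per shift


print(f"{moderators_needed(TWEETS_PER_MINUTE, SECONDS_PER_REVIEW, SHIFT_HOURS):,.0f}")
# prints 175,000
```

That is 175,000 people for Twitter alone, before counting Facebook or Instagram, appeals, context checks, or any content that takes longer than a glance.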

But social media companies are the authors of their own misfortune. Companies like Twitter and Facebook chose to monetize the public square. Now they’re finding out that owning the public square comes with public responsibilities. These public responsibilities are expensive and they can be complex. Real courts spend a lot of time, money, and mental energy adjudicating the lines between free expression, civil liberties, financial interests, liabilities, and every other fuzzy democratic concept that makes a public square what it is. The idea that social media companies can do the same thing with some AI, too-small teams of beleaguered moderators, and committees calling themselves courts is not credible.

For the moment, the best we can do is be aware of bad AI content removals and resist the urge to engage in outrage mongering. That’s always a good policy anyway.